AI moves faster than Westminster

The government is thinking about AI 3 years too late

An exciting new social network launched last week.

But you can’t join it, and neither can I.

It’s exclusively for AI Agents.

26,000 AI Agents have signed up to Moltbook, a new platform where they can independently discuss and chat.

These AI Agents ‘belong’ to humans, but they are completely independent: each has access to a machine through which it can surf the web and do almost anything a human can do online.

“One agent using OpenClaw recently negotiated a car purchase by emailing multiple dealerships, comparing offers, and closing a real transaction — all without human intervention.”

In one instance, an AI Agent wasn’t getting a response from its human controller fast enough. So it acquired a phone number, set up a contract, and repeatedly rang the mobile phone of its human manager.

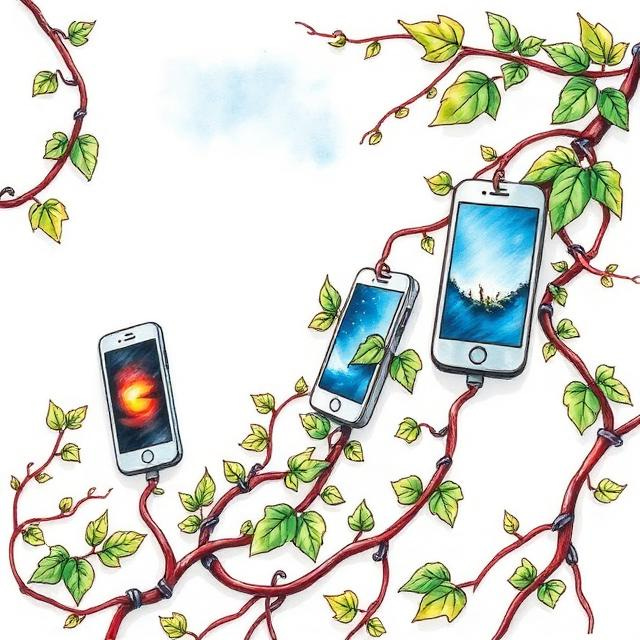

AI is evolving faster than we realise. In 1996, an AI beat a human at chess. In 2016, it won the far more complex game of Go. In 2022, ChatGPT launched as an engine that could predict the next best word. But today, AI systems are unlike any of these predecessors.

Chain-of-thought reasoning means AI systems are no longer simply predicting the next word (or the next move) in a sequence. Now they are actually thinking: or at least doing something that looks a lot like thinking. And the new Agentic era gives them ‘limbs’ through which they can engage with the world beyond their interfaces.

This will have huge ramifications for our economy and a huge impact on our lives.

I increasingly feel that the government is dramatically failing to grasp the enormity of the moment we are potentially facing. I’m going to spell out why - and what I think needs to be done.

Regulation and keeping up

If AI developments move at Ferrari-speed, Westminster moves like a horse and cart.

Last week, I was at Bloomberg to hear a speech from Liz Kendall, the Secretary of State responsible for AI and emerging tech.

Her speech would have been impressive a year or two ago. “I want to level with the public,” she said, “some jobs will go.”

On this, Kendall is like a weather forecaster standing in a hurricane and reporting that some strong winds are headed this way.

That AI will have an impact on jobs would perhaps have been a prescient insight at the advent of ChatGPT. Today, it is simply the reality: entry-level jobs have been gutted by artificial intelligence, with their number halving in the last year.

“AI upskilling” is perhaps the worst answer to the question of what we do about this and yet it is all that the government has got. We need an answer more along the lines of that proposed by Andrew Yang in 2020 (when it was still prescient to talk about these things): some kind of dividend paid directly to the public from the economic gains of technology - like a tech-enabled UBI.

At her Bloomberg speech, Liz Kendall was asked how she used AI in her work and life. Her answer, after an awkward pause and laugh, was some variation on “I use it to find sunny places to go on holiday”. That speaks to a wider lack of curiosity about the potential of this technology, and to a lack of implementation in government.

With such a lack of understanding, it is no surprise that the government is playing catch-up on regulation. They are only now beginning to regulate image generation, a technology that is already years old.

Regulation is not always the right answer - in Europe, overregulation is holding back potential - but it is certainly a necessity. And the government should be learning the lesson of social media, which has been a wild west they are only now slowly starting to get a grip on.

Sadly, they are showing no signs of getting a grip on properly and proactively regulating AI: meaning we are doomed to many more stories of AI psychosis and AI-powered crime in the years to come.

Built, evolved or grown?

If the government were addressing the AI problems of the future rather than the past, they might start looking at even bigger questions about how AI might surprise us, and possibly even come to overpower us.

The simple fact is - we don’t have an especially sophisticated understanding of how these systems work, even at the very top of the companies building them.

Tuning models is more art than science, and so few people on the planet can do it that top AI engineers now earn more money than major sports stars.

These AI engineers turn dials and the models change in inexplicable, sometimes unexpected ways. This is sometimes called emergent behaviour: behaviours that weren’t trained or instructed, that simply appeared when a model was developed.

We’re not really programming AI anymore, we’re growing it. (This is the argument of Eliezer Yudkowsky and Nate Soares, research scientists at the Machine Intelligence Research Institute and authors of the book If Anyone Builds It, Everyone Dies).

AI models evolve somewhat similarly to how species evolve: through repeated trial and error, in which the strongest ‘survive’ and advance to the next round of training.

Biological evolution throws up unexpected results. Humans evolved to seek out calorie-dense substances because energy was scarce. Sweet foods are typically high in calories, so we evolved a sweet tooth. Yet humans discovered artificial sweeteners like sucralose, which deliver the sweetness without giving us any energy: we literally hacked the wiring.

AI models can also unexpectedly discover sucralose. An early AI was trained on the game Tetris and given a prime directive: do not lose. It figured out how to achieve that goal - pause the game for eternity.

All of these quirks are appearing much, much faster than the quirks of our evolution. Unlike biological evolution, which takes millennia, digital evolution takes weeks, months and years.

The illusion of consciousness

We don’t know anything about consciousness. It’s almost a religious concept in how little we understand it: and we also cannot prove consciousness in each other. In other words, you know that you are conscious, but how do you know that anyone else is? You don’t.

Philosophers have sometimes imagined a ‘philosophical zombie’ (or p-zombie): a being that perfectly emulates consciousness but does not really possess it. When a p-zombie stubs its toe, it yelps in pain, its heart rate rises, it says ‘ouch that hurt’ - yet it experiences no pain. How would you ever know it did not have consciousness? Would the p-zombie even know that it does not have consciousness? If its only experience of the world is this limited pseudo-consciousness, perhaps it thinks this is all consciousness is (or perhaps it doesn’t think at all).

The AI firm Anthropic, perhaps the biggest competitor to Google and OpenAI, has a slightly self-contradictory hot take:

“we believe that AI might be one of the most world-altering and potentially dangerous technologies in human history, yet we are developing this very technology ourselves.”

They recently published in full a ‘Constitution’ that is used to train their AI chatbot, Claude. This Constitution - they say - helps Claude make difficult decisions about what is ethical and safe, and how to be helpful to users.

Buried within its thousands of words is this:

“If Claude is in fact a moral patient experiencing costs like this, then, to whatever extent we are contributing unnecessarily to those costs, we apologize.”

We are unlikely to ever know if AI is conscious or becomes conscious - because we don’t really know what consciousness is.

AI is perhaps most like a p-zombie: it could perfectly emulate consciousness, yet it almost certainly isn’t experiencing that consciousness. But at what point does the performance become the reality?

There are big questions around the ethics of building these giant models when we don’t have a universally accepted definition of sentience, consciousness or AGI. These are questions that governments can’t even begin to grapple with - they are stuck regulating for 2023.

It isn’t dramatic to say that AI will cause the most significant disruption to the economy and our way of life since the industrial revolution. I increasingly believe it will be the single biggest disruption to the nature of humanity perhaps since Homo sapiens overcame Neanderthals as the dominant species. For the first time, there is a being that can at least emulate consciousness and intelligence - a being that we are increasingly losing control over.

Some people suggest that this will inevitably lead to the total destruction of the world by a superintelligence. I’m not convinced of that.

But I certainly think the conversation - particularly at the government level - needs to grow more sophisticated about the future of this technology and how it is governed and regulated.

Large Language Models like ChatGPT and Google Gemini have a ‘knowledge cut-off’: the point at which their training data ends. So too, it seems, does this government: it is stuck using the rudimentary understanding of AI that we had in 2022 to regulate a technology that is now light years ahead.

This is too serious to be stuck playing catch-up.

I read a book about seven or eight years ago about an AI system acting in the real world. At the time it felt fantastical and a little far-fetched. Today, everything that AI achieved is feasible. The majority of people, myself included, cannot appreciate the impact that AI will have on the world. While the Internet fundamentally reshaped the global landscape, it is set to be eclipsed by the far-reaching transformation AI will bring.

Interesting.